Augmented reality (AR) has inarguably become one of the fastest-growing sectors in extended reality (XR), and enterprises use it extensively for remote collaboration and inspections.

The AR industry has seen several crucial advances in eye and hand tracking, gesture recognition, deployment platforms, and interoperability between components and software, opening up new use cases and driving technological innovation.

As the Metaverse evolves from a ‘virtual’ reality into the next major communications platform, combining spatial computing with the Internet, AR will become a key component of enterprise solutions.

For our XR Today round table, we are pleased to welcome:

- Hugo Swart, Vice-President and General Manager of XR and Metaverse at Qualcomm Technologies

- Jonathan Reeves, Chief Executive and Founder of Arvizio

- Dr Brad Quinton, Chief Executive and CTO of Singulos Research

Our esteemed panellists discussed the role of their AR solutions in the wider XR market, the trends shaping the industry, and their views on the future of the Metaverse.

XR Today: What sets your AR solution apart from the competition? What has your company considered when designing hardware and software solutions for devices?

Hugo Swart: What sets Qualcomm apart is its unique role as a horizontal player, enabling and supporting the entire ecosystem.

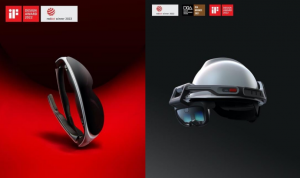

We deliver best-in-class system-on-a-chip (SoC) platforms that power over 50 XR devices, and we offer the software and perception algorithms needed to enable XR experiences. We also provide reference device hardware so that our customers can go to market quickly, along with many other ecosystem initiatives.

Hugo Swart, Vice-President and General Manager of XR

When designing hardware, we work very closely with all of our partners to assess end-user needs and build a platform that meets and surpasses those requirements.

On the software and developer ecosystem side, I would point to our Snapdragon Spaces XR Developer Platform, which was born from Qualcomm’s commitment to helping enable and scale head-worn AR, especially at the dawn of the Metaverse.

We wanted to help reduce developer friction and provide a uniform set of AR features independent of device manufacturers or distribution methods.

Jonathan Reeves: Arvizio is an AR software provider with solutions that operate across a range of AR devices, including mobile devices and AR smart glasses.

We believe the market requires a cross-platform approach for software solutions that can operate with a variety of smart glasses and mobile devices. This avoids scenarios where the customer is locked into a single vendor for AR smart glasses.

Dr Brad Quinton: We deliberately designed the Perceptus Platform to support a diverse set of hardware platforms. From Android and iOS mobile phones and tablets to AR glasses, the Perceptus Platform gives AR application developers a consistent framework for understanding objects in their 3D environment.

Our key considerations were creating a scalable training process that lets AR designers define their objects of interest quickly and easily, and ensuring that our solution runs in real time using only edge hardware, so that sensitive user data never has to be transferred to the cloud.

XR Today: Which trends do you see taking shape in the AR sector, and which aspects of AR do you believe are more advanced and which are lagging?

Hugo Swart: A trend we see taking shape, and would like to accelerate, is the shift from smartphone AR to head-worn AR, which is the intent behind Snapdragon Spaces.

The open ecosystem approach allows Snapdragon Spaces developers to build their head-worn AR experience once and have it scale to a range of devices and content distribution channels. Once Snapdragon Spaces becomes available to all developers, we think it will help spur a new era of head-worn AR experiences spanning entertainment, gaming, education, training, health and beyond!

Jonathan Reeves: AR glasses typically fall into two key categories based on their ability to provide spatial mapping. Devices such as Microsoft’s HoloLens 2 and Magic Leap can scan a room and use advanced simultaneous localization and mapping (SLAM) algorithms to anchor AR content in place with a degree of accuracy.

As a result, when the wearer moves their head, the content remains anchored in a fixed position. Other AR smart glasses lack spatial mapping and may not provide the degree of accuracy required for enterprise use cases.

Jonathan Reeves, Founder and Chief Executive of Arvizio

To date, accurate spatial mapping has relied on depth-sensing cameras to build a 3D mesh of the space, as the LiDAR sensors in the iPhone Pro and iPad Pro have demonstrated.

To reduce the cost and weight of AR smart glasses, vendors are actively working on SLAM-based tracking approaches using stereoscopic cameras to deliver accurate tracking at a reduced cost.

This is challenging to achieve across a broad range of lighting conditions, but will lead to a significant reduction in cost, size, and weight.

A second key requirement for widespread adoption is hand gesture recognition. Devices such as HoloLens 2 have set the bar for this type of mixed reality (MR) interaction, and low-cost devices entering the market will need to offer a similar level of hands-free operation.

Dr Brad Quinton: The main trend we see in the AR sector is that many of the underlying hardware challenges are rapidly being resolved through maturing optics, high-speed wireless connectivity and high-quality virtual object rendering.

Where we see AR lagging is in the use of artificial intelligence (AI) to understand the context of the user’s AR experience and deliver high-value, contextually aware experiences and applications.

All modern mobile processors have high-performance neural accelerators, but for the most part, they have yet to be deployed in a meaningful way for AR because of the lack of appropriate tools, platforms and software.

XR Today: Why is interoperability a key component of tailoring your AR solutions for multiple purposes? How has your company accommodated versatility for your clients, both for deployment and continued support?

Hugo Swart: There are many facets to interoperability. For example, our chips are designed to interoperate with multiple display types and technologies.

Another interoperability angle is our support for OpenXR, as we want to make it as frictionless as possible for developers to create immersive experiences. Snapdragon Spaces is also designed to leverage existing developer tools for creating 3D content, such as the Unity engine and Epic’s Unreal Engine.

Jonathan Reeves: Arvizio software solutions for AR have been designed to work across a variety of AR devices, including AR smart glasses and mobile devices.

We currently support HoloLens 2, Magic Leap, and iOS and Android devices, and expect to add support for more devices enabled by Qualcomm’s Snapdragon Spaces initiative in the coming months.

During the ongoing COVID-19 pandemic, remote collaboration has been a key driver of AR adoption, as the crisis has made it necessary for business continuity.

Arvizio offers two solutions: Immerse 3D and AR Instructor. Our Immerse 3D software allows multiple users to work with 3D models across locations for design reviews and stakeholder collaboration. AR Instructor offers step-by-step work instructions and remote expert “see-what-I-see” video sharing for additional guidance and work validation.

Dr Brad Quinton, Chief Executive and CTO of Singulos Research

Dr Brad Quinton: Interoperability is key for us because there is still no de facto standard for AR hardware. We believe it will be important to support a variety of hardware and operating systems in the near to medium term, as users and application developers learn which hardware works best for them and their usage scenarios.

XR Today: What are your company’s thoughts on the Metaverse? When do you expect a solid foundation for the platform, and what would it look like?

Hugo Swart: We truly believe in the potential of the Metaverse and that Qualcomm is your ticket to it. Qualcomm has been investing in the underlying and core technologies to enable the Metaverse for over a decade, and we will continue to do so to help all our partners build and realize its full potential.

We are enabling our customers’ different Metaverse ecosystems and deploying our own with Snapdragon Spaces, so we believe the foundation is being built and something will come to fruition in the not-so-distant future.

Jonathan Reeves: We do not see a single Metaverse meeting the needs of all, but rather a set of Metaverse categories with several approaches being offered in each.

We believe four categories of Metaverse will emerge (Industrial, Business, Social, and Gaming), and in each category there will be a variety of solutions and vendors vying for leadership. We see this as a far more likely outcome than a single, dominant Metaverse platform.

Dr Brad Quinton: We believe that the Metaverse will be fundamentally personal and anchored in our own physical spaces. We see a continuity between AR and immersive VR, where users will select the minimum amount of immersion to achieve the task and experience they want, merging the value of the Metaverse with the comfort of physical reality.

Rather than paying the cost of immersion as an entry fee to the Metaverse, users will move through an AR-first Metaverse that transitions to immersive experiences when it makes sense.

We believe that mobile processors with advanced AI hardware, coupled with 5G networks, will be the platform for the AR-first Metaverse within the next one to three years.