Recapping the AREA/DMDII 2nd Enterprise AR Workshop

The Augmented Reality Enterprise Alliance (AREA) and the Digital Manufacturing and Design Innovation Institute (DMDII), a UI LABS collaboration, recently hosted the 2nd Enterprise AR workshop at UI LABS in Chicago. The workshop drew over 110 attendees, from enterprises that have purchased and are deploying AR solutions, to providers offering leading-edge AR solutions, to non-commercial organisations such as universities and government agencies.

“The goal of the workshop is to bring together practitioners of Enterprise AR to enable open and wide conversation on the state of the ecosystem and to identify and solve barriers to adoption,” commented Mark Sage, the Executive Director of the AREA.

Hosted at the excellent UI LABS and supported by the AREA members, the workshop gave attendees two days of discussions, networking, and interactive sessions.

Here’s a brief video summary capturing some of the highlights.

Introduction from the Event Sponsors

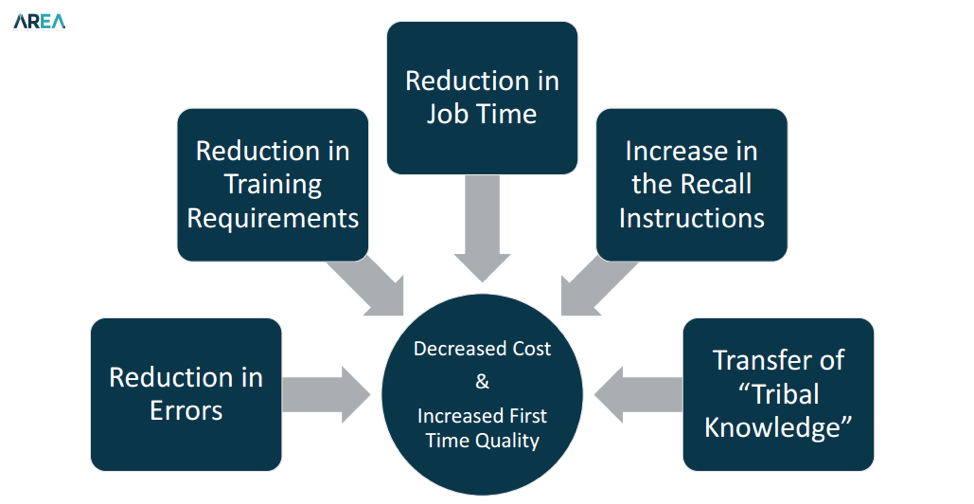

Sponsored by Boeing and Upskill, the workshop was kicked off by Paul Davies, Associate Technical Fellow at Boeing and the AREA President. His introduction focused on the status of the Enterprise AR ecosystem, highlighting the benefits gained from AR and some of the challenges that need to be addressed.

Summary of AR Benefits

Mr Davies added, “We at Boeing are pleased to be Gold sponsors of this workshop. It was great to listen to and interact with other companies who are working on AR solutions. The ability to discuss in detail the issues and potential solutions allows Boeing and the ecosystem to learn quickly.”

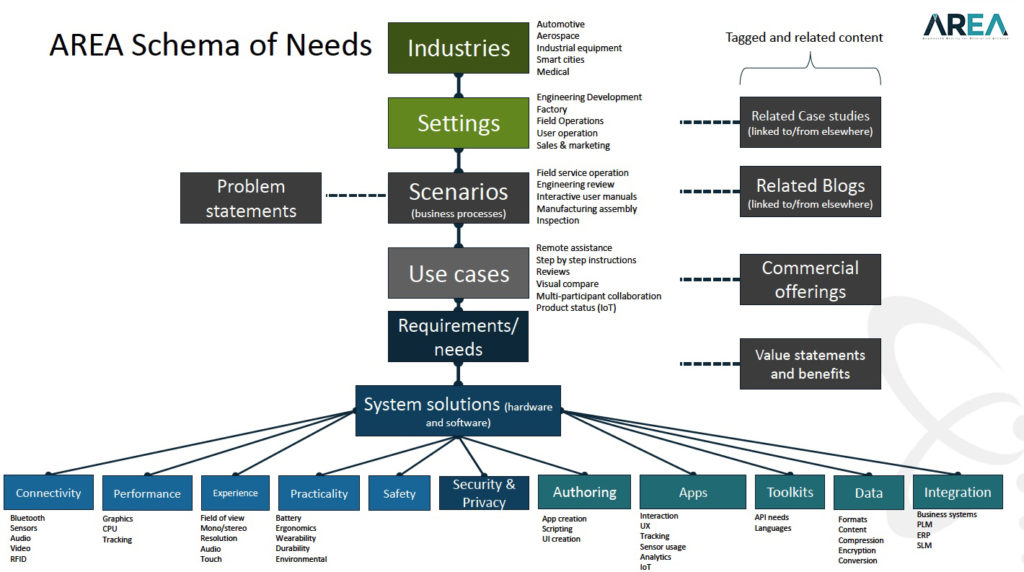

Developing the Enterprise AR Requirements Schema

The rest of the day focused on brainstorming and developing a set of use cases that the AREA will build on to create the AREA requirements / needs database, and that will ultimately be added to the AREA Marketplace. The session was led by Glen Oliver, Research Engineer from AREA member Lockheed Martin, and Dr. Michael Rygol, Managing Director of Chrysalisforge.

The attendees were organized into 17 teams, each presented with an AR use case (based on the use cases documented by the AREA). The teams were asked to add more detail to the use case and define a scenario (a definition of how to solve the business problems, often containing a number of use cases and technologies).

The following example was provided:

- A field service technician arrives at the site of an industrial generator. They use their portable device to connect to a live data stream of IoT data from the generator to view a set of diagnostics and service history of the generator.

- Using the AR device and app they are able to pinpoint the spatial location of the reported error code on the generator. The AR service app suggests a number of procedures to perform. One of the procedures requires a minor disassembly.

- The technician is presented with a set of step-by-step instructions, each of which provides an in-context 3D display of the step.

- With a subsequent procedure, there is an anomaly which neither the technician nor the app is able to diagnose. The technician makes an interactive call to a remote subject matter expert who connects into the live session. Following a discussion, the SME annotates visual locations over the shared display, resulting in a successful repair.

- The job requires approximately one hour to perform, meaning the portable device should function without interruption throughout the task.

- With the job complete, the technician completes the digital paperwork and marks the job complete (which is duly stored in the on-line service record of the generator).

*blue = use case

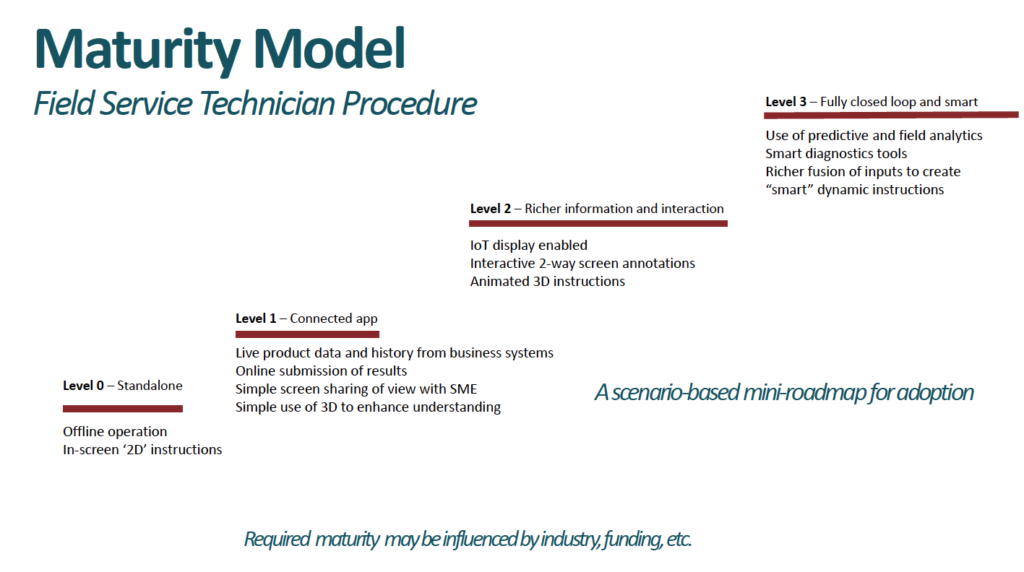

The tables were buzzing with debate and discussion with a lot of excellent output. The use of a maturity model to highlight the changes in scenarios was a very useful tool. At the end of the session the table leaders were asked to present their feedback on how useful the conversations had been.

Technology Showcase and Networking Session

The day ended with a networking session in which a number of companies provided demos of their solutions.

Day 2: Focus on Barriers to AR Adoption

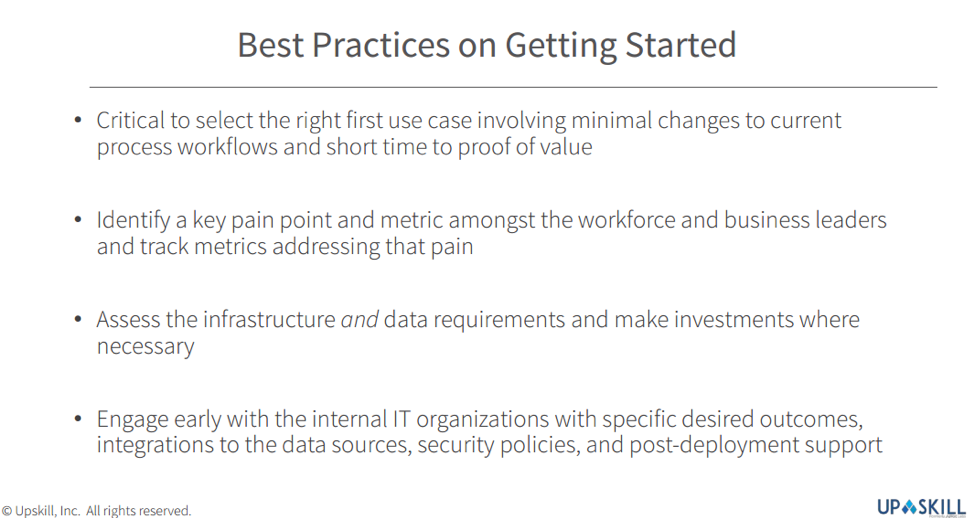

The second day of the workshop started with an insightful talk from Jay Kim, Chief Strategy Officer at Upskill (the event's Silver sponsor), who outlined the benefits of Enterprise AR and how to avoid “pilot purgatory” (i.e., the continual cycle of delivering pilots with limited industrialisation of the solution).

Next, Lars Bergstrom of Mozilla Research provided a look into how enterprises will soon be able to deliver AR experiences to any AR device via a web browser. Attendees found the session a very interesting introduction to the potential of WebAR and how it might benefit their organisations.

Barriers to Enterprise AR Adoption – Safety and Security

The next two sessions generated discussion and debate on two of the key barriers to adoption of Enterprise AR. They were expertly moderated by the AREA Committee chairs for:

- Security – Tony Hodgson, Bob Labelle and Frank Cohee of Brainwaive LLC

- Safety – Dr. Brian Laughlin, Technical Fellow at Boeing

Both sessions provided an overview of the potential issues for enterprises deploying AR and providers building AR solutions. Again, many attendees offered contributions on the issues, possible solutions and best practice in these fields.

The AREA will document the feedback and share the content with the attendees, as well as using it to help inform the AREA committees dedicated to providing insight, research and solutions to these barriers.

Barriers to Enterprise AR Adoption – Change Management

Everyone was brought back together to participate in a panel session focusing on change management, from both an organisational and a human perspective.

Chaired by Mark Sage, the panel included thought leaders and practitioners:

- Paul Davies – Associate Technical Fellow at Boeing

- Mimi Hsu – Corporate Digital Manufacturing lead at Lockheed Martin

- Beth Scicchitano – Project Manager for the AR Team at Newport News Shipbuilding

- Jay Kim – Chief Strategy Officer at Upskill

- Carl Byers – Chief Strategy Officer at Contextere

After a short introduction, the questions focused on whether “AR should be a topic discussed at the CEO level or by the IT / Innovation teams.” After insightful comments from the panel, the audience was asked to provide their input.

Questions then focused on how to convince the workforce to embrace AR. Boeing, Newport News Shipbuilding and Lockheed Martin provided practical and useful examples.

There followed a range of questions from the audience with the panel members offering their experiences in how their organisations have been able to overcome some of the change management challenges when implementing AR solutions.

Final Thoughts

The general feedback on the two days was excellent. The ability to share, debate and discuss the potential and challenges of Enterprise AR was useful for all attendees.

The AREA is the only global, membership-funded, non-profit alliance dedicated to helping accelerate the adoption of Enterprise AR by supporting the growth of a comprehensive ecosystem. It helps its members develop thought leadership content, reduce the barriers to adoption and run workshops to help enterprises effectively implement Augmented Reality technology to create long-term benefits.

The AREA will continue to work with the Digital Manufacturing and Design Innovation Institute (DMDII), where innovative manufacturers go to forge their futures. In partnership with UI LABS and the Department of Defense, DMDII equips U.S. factories with the digital tools and expertise they need to begin building every part better than the last. As a result, more than 300 partners increase their productivity and win more business.

If you are interested in AREA membership, please contact Mark Sage, Executive Director.

To inquire about DMDII membership, please contact Liz Stuck ([email protected]), Director of Membership Engagement.